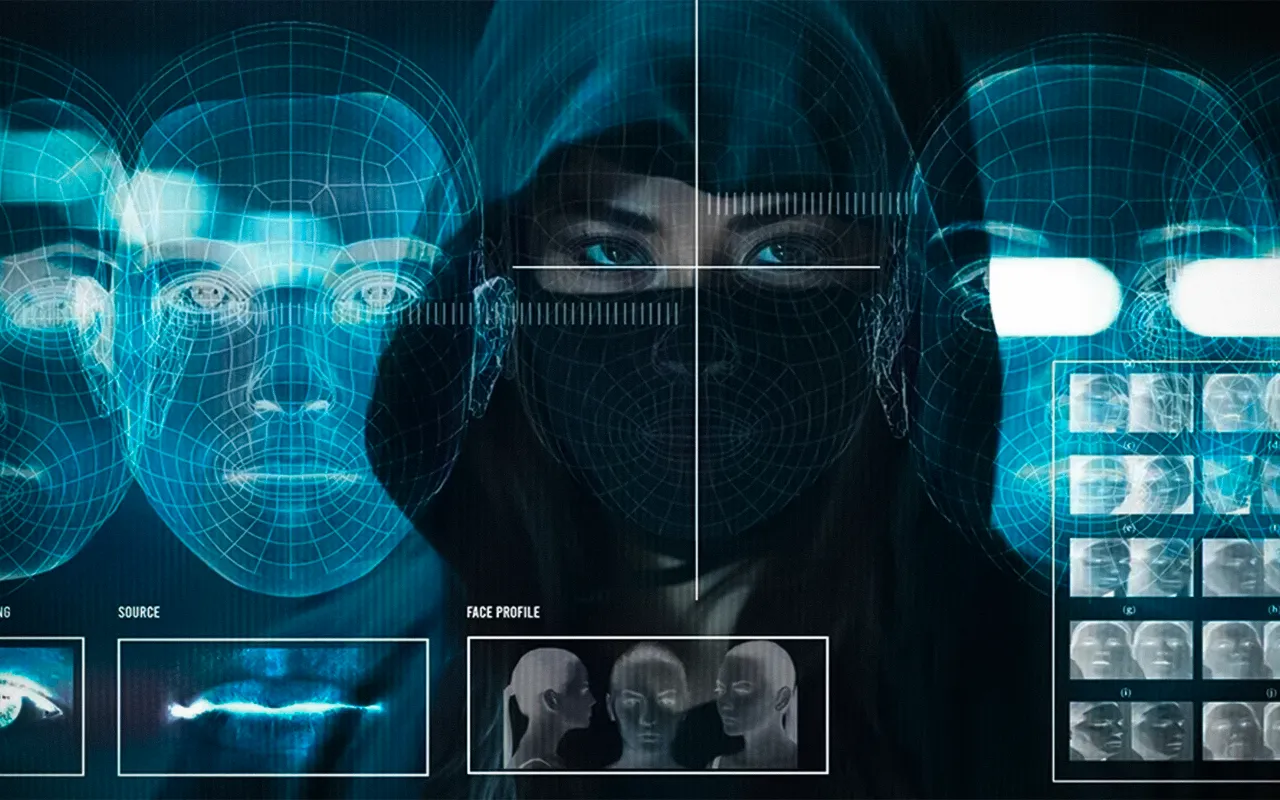

Cyberfraudsters have recently turned to a sophisticated form of fraud known as the “deepfake” scam, which uses artificial intelligence to compromise financial and accounting information. These attacks, which impersonate trusted people by manipulating video and audio, are increasing sharply. One recent study recorded a 2,137% increase in deepfake-related fraud attempts, underscoring the need for stronger cybersecurity measures across industries.

What is a Deepfake Scam?

Deepfakes are highly realistic but artificial media generated with AI. Scammers can use fake videos, photos, or audio to impersonate CEOs, finance officers, or other prominent figures in a company in order to obtain financial information. Typically, the impersonated senior official is used to authorize fraudulent transactions, alter financial statements, or extract confidential accounting details.

In 2024, Deloitte reported that, according to its survey, more than half of C-suite executives expect deepfake scams targeting their financial operations to increase over the next year. According to the same report, 26% of businesses affected by deepfakes said financial and accounting data were the most targeted.

Why Deepfake Attacks Are Rising

Deepfake attacks are rising largely because AI tools have become widely available. New generative AI models can create fake identities or videos with minimal resources, allowing fraudsters to launch large-scale attacks for little investment. According to experts, deepfake fraud attempts increased by 3,000% in 2023, with cheap, easy-to-use AI tools cited as one cause of this sharp spike.

The most striking case came in 2024, when a UK firm lost £20 million to a deepfake scam. The fraudster used a convincing deepfake video to pose as the company's CFO, appeared in a virtual meeting with an employee, and authorized a fraudulent transaction.

Financial Loss

The financial losses caused by deepfake scams are significant. Businesses large and small reportedly lost $480,000 to these frauds in 2023. The potential damage is greatest in industries that handle large sums of money or sensitive financial data, such as banking, insurance, and corporate finance. Last year, the UK's Financial Conduct Authority described AI-generated deepfakes as an existential threat to the financial sector.

Although many organizations recognize the growing threat, many lack the skills, budget, or technology to counter deepfake abuse. Roughly three-quarters of survey respondents reported that such constraints prevent them from putting adequate defenses in place.

How Deepfake Scams Work

Deepfake scams targeting financial data typically involve careful planning. Fraudsters usually rely on advanced social engineering to gain the confidence of employees or executives before deploying the deepfake. In extreme cases, they create audio or video deepfakes of an executive instructing an employee to send money or share sensitive financial information. Because the technology is so convincing, most employees do not recognize the deception until it is too late.

One of the most common forms of deepfake-enabled fraud is account takeover, in which fraudsters steal credentials or exploit weak passwords to seize a business account, then assume the legitimate holder's identity to make unauthorized transactions or extract valuable financial data. According to recent reports, deepfakes account for 6.5% of all fraud attempts.

Defending Against Deepfake Fraud

As the threat from deepfake scams grows, so do efforts to counter it. Going forward, AI-based detection tools, such as “liveness” verification systems, may be the only reliable way to distinguish real content from fabricated content. Because even advanced generative AI struggles to reproduce every detail of human behavior, these tools can catch small inconsistencies in deepfakes, such as unnatural blinking patterns and inconsistent lip movements. Detection techniques are growing more sophisticated as fraudsters' methods evolve, but the most advanced deepfakes remain difficult to spot.
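To make the blinking cue more concrete, here is a minimal Python sketch of one well-known heuristic, the eye aspect ratio (EAR), which estimates how open an eye is from six facial landmarks and can be used to count blinks across video frames. It is an illustration only, not a description of how any commercial liveness system works. It assumes the landmark coordinates have already been extracted by a separate face-tracking tool, and the closed-eye threshold, minimum blink length, and "plausible" blink-rate range are hypothetical values chosen for the example.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio from six landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2 and p3 lie on the upper lid,
    p6 and p5 on the lower lid. The ratio falls toward zero as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    The default threshold and run length are hypothetical, for illustration only.
    """
    blinks = 0
    closed_run = 0
    for ear in ears:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    # A blink still in progress at the end of the clip also counts.
    if closed_run >= min_frames:
        blinks += 1
    return blinks


def blink_rate_flag(
    ears: Sequence[float],
    fps: float,
    low: float = 5.0,    # hypothetical lower bound, blinks per minute
    high: float = 40.0,  # hypothetical upper bound, blinks per minute
) -> Tuple[bool, float]:
    """Return (suspicious, blinks_per_minute) for a clip's per-frame EAR values."""
    if fps <= 0 or not ears:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(ears) / fps / 60.0
    rate = count_blinks(ears) / minutes
    return (rate < low or rate > high), rate


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
    # One minute of video at 30 fps in which the eyes never close.
    frames = [0.3] * (30 * 60)
    print(blink_rate_flag(frames, fps=30))  # (True, 0.0)
```

On its own, a check like this is easy to defeat, since newer deepfakes can simulate natural blinking; that is why detection systems weigh many cues together rather than relying on any single signal.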

With deepfakes becoming more common, cybersecurity experts advocate a layered, defense-in-depth approach. AI detection systems should be combined with employee education and regular updates to cybersecurity protocols. Organizations that have been targeted by deepfakes within the past year are especially likely to invest in training employees to recognize novel digital attacks.

Despite growing awareness, some organizations have still done nothing to protect themselves against deepfake scams. A Deloitte survey revealed that 9.9% of executives admitted their organizations have no safeguards in place against deepfake-related financial fraud.

The Way Forward

Deepfake fraud may herald a new era of cybercrime in which AI-generated deception becomes the leading weapon. Experts believe that as AI technology advances, deepfake scams will become even better at blurring the line between real and fake media. Financial institutions, corporations, and government agencies therefore need to invest in AI-driven detection systems and comprehensive fraud-prevention programs.

This battle will not end anytime soon, but there is reason for hope. Organizations that take a proactive approach, including deploying advanced AI tools and improving employee awareness, stand a better chance of defending themselves against this new threat. The financial and reputational risks of deepfake scams cannot be ignored, and because fraudsters exploit every technological advance, businesses must keep evolving as well.

Combining AI with cybersecurity will be key to safeguarding the integrity of financial systems. The challenge for businesses is to outpace criminals who increasingly rely on AI for deception. Deepfakes pose severe threats, but they are also driving innovative solutions for combating fraud.